Researchers have introduced an innovative real-time emotion recognition technology, leveraging a personalized, self-powered interface for comprehensive emotional analysis. This technology, promising for wearable devices, signifies a leap towards emotion-based personalized services and enhanced human-machine interactions. Credit: UNIST

Professor Jiyun Kim and his team at the Department of Materials Science and Engineering at Ulsan National Institute of Science and Technology (UNIST) have developed a technology capable of identifying human emotions in real time. The innovation could benefit various industries, including next-generation wearable systems that provide services based on emotions.

Understanding and accurately extracting emotional information has long been a challenge due to the abstract and ambiguous nature of human affects such as emotions, moods, and feelings. To address this, the research team has developed a multi-modal human emotion recognition system that combines verbal and non-verbal expression data to efficiently utilize comprehensive emotional information.

Innovation in Wearable Technology

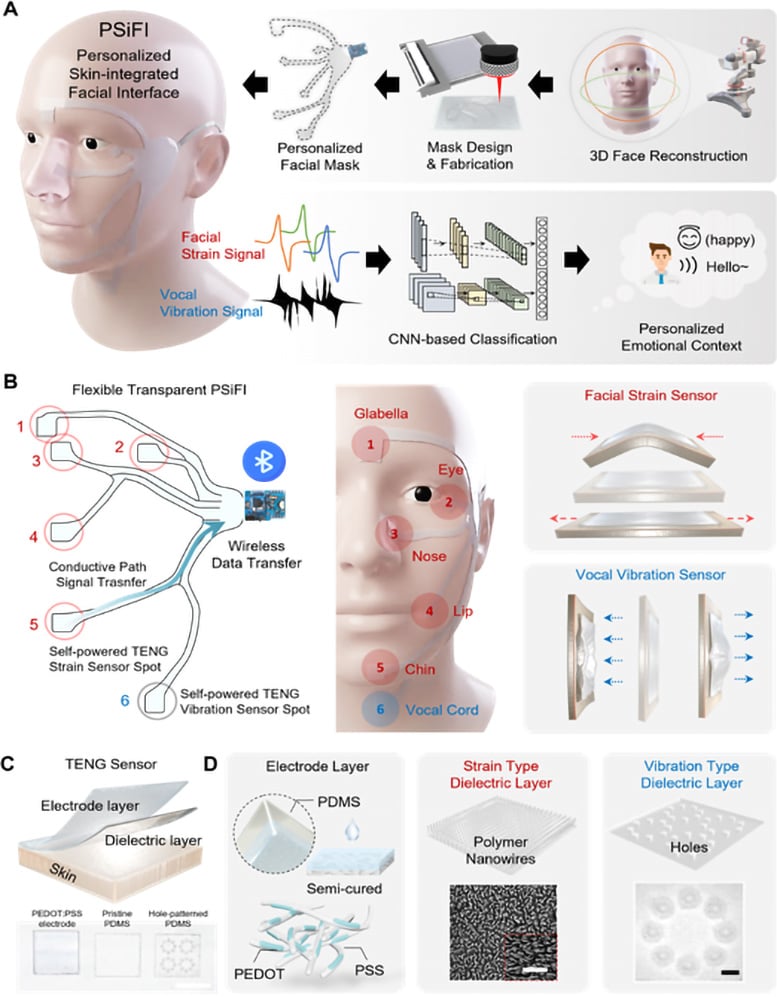

At the core of this system is the personalized skin-integrated facial interface (PSiFI) system, which is self-powered, facile, stretchable, and transparent. It features a first-of-its-kind bidirectional triboelectric strain and vibration sensor that enables the simultaneous sensing and integration of verbal and non-verbal expression data. The system is fully integrated with a data processing circuit for wireless data transfer, enabling real-time emotion recognition.

Using machine learning algorithms, the technology performs accurate, real-time human emotion recognition, even when individuals are wearing masks. The system has also been successfully applied in a digital concierge application within a virtual reality (VR) environment.

Schematic illustration of the system overview with personalized skin-integrated facial interfaces (PSiFI). Credit: UNIST

The technology is based on the phenomenon of “friction charging” (the triboelectric effect), in which two materials acquire opposite electrical charges when they rub against each other and separate. Notably, the system is self-powered, requiring no external power source or complex measuring devices for data recognition.

Customization and Real-Time Recognition

Professor Kim commented, “Based on these technologies, we have developed a skin-integrated face interface (PSiFI) system that can be customized for individuals.” The team utilized a semi-curing technique to manufacture a transparent conductor for the friction charging electrodes. Additionally, a personalized mask was created using a multi-angle shooting technique, combining flexibility, elasticity, and transparency.

The research team successfully integrated the detection of facial muscle deformation and vocal cord vibrations, enabling real-time emotion recognition. The system’s capabilities were demonstrated in a virtual reality “digital concierge” application, where customized services based on users’ emotions were provided.
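To make the idea of combining the two signal types concrete, here is a minimal Python sketch of feature-level fusion. It is an illustration only and not the team's actual model: the function names, the toy signal values, the emotion labels, and the nearest-centroid classifier are all assumptions chosen for brevity.

```python
import math

def features(signal):
    """Mean, peak-to-peak amplitude, and RMS of one sensor channel."""
    mean = sum(signal) / len(signal)
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    return [mean, max(signal) - min(signal), rms]

def fuse(strain, vibration):
    """Feature-level fusion: concatenate the features of both modalities."""
    return features(strain) + features(vibration)

def train_centroids(samples):
    """samples: list of (fused_vector, label). Returns label -> mean vector."""
    sums, counts = {}, {}
    for vec, label in samples:
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}

def classify(centroids, vec):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda lab: math.dist(centroids[lab], vec))

# Hypothetical toy data: (facial strain samples, vocal vibration samples).
training = [
    (fuse([0.1, 0.9, 0.1, 0.8], [0.5, -0.5, 0.5, -0.5]), "happy"),
    (fuse([0.0, 0.1, 0.0, 0.1], [0.05, -0.05, 0.05, -0.05]), "neutral"),
]
centroids = train_centroids(training)

# A new reading with large facial strain and strong vocal vibration.
label = classify(centroids, fuse([0.2, 0.8, 0.1, 0.9], [0.4, -0.4, 0.4, -0.5]))
print(label)
```

The point of the sketch is only that both channels feed a single feature vector, so one classifier sees facial and vocal cues together. A real system would use richer features and a trained model.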

Jin Pyo Lee, the first author of the study, stated, “With this developed system, it is possible to implement real-time emotion recognition with just a few learning steps and without complex measurement equipment. This opens up possibilities for portable emotion recognition devices and next-generation emotion-based digital platform services in the future.”

From left: Professor Jiyun Kim and Jin Pyo Lee of the Department of Materials Science and Engineering at UNIST. Credit: UNIST

The research team conducted real-time emotion recognition experiments, collecting multimodal data such as facial muscle deformation and voice. The system exhibited high emotional recognition accuracy with minimal training. Its wireless and customizable nature ensures wearability and convenience.

Furthermore, the team applied the system to VR environments, utilizing it as a “digital concierge” for various settings, including smart homes, private movie theaters, and smart offices. The system’s ability to identify individual emotions in different situations enables the provision of personalized recommendations for music, movies, and books.
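As a rough illustration of how a recognized emotion might drive such a concierge, the following Python sketch maps a setting and an emotion to a suggestion. The setting names, emotion labels, and recommendations are hypothetical placeholders, not details from the study.

```python
# Hypothetical lookup table: (setting, emotion) -> suggestion.
RECOMMENDATIONS = {
    ("smart_home", "sad"): "music: an upbeat playlist",
    ("movie_theater", "happy"): "movie: a light comedy",
    ("smart_office", "stressed"): "book: a short relaxing read",
}

def recommend(setting, emotion):
    """Return a suggestion for the pair, or a neutral fallback if none is defined."""
    return RECOMMENDATIONS.get((setting, emotion), "no recommendation")

print(recommend("smart_home", "sad"))
```

In practice the emotion label would come from the recognition system rather than being passed in by hand.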

Professor Kim emphasized, “For effective interaction between humans and machines, human-machine interface (HMI) devices must be capable of collecting diverse data types and handling complex integrated information. This study exemplifies the potential of using emotions, which are complex forms of human information, in next-generation wearable systems.”

Reference: “Encoding of multi-modal emotional information via personalized skin-integrated wireless facial interface” by Jin Pyo Lee, Hanhyeok Jang, Yeonwoo Jang, Hyeonseo Song, Suwoo Lee, Pooi See Lee and Jiyun Kim, 15 January 2024, Nature Communications.

DOI: 10.1038/s41467-023-44673-2

The research was conducted in collaboration with Professor Pooi See Lee of Nanyang Technological University in Singapore and was supported by the National Research Foundation of Korea (NRF) and the Korea Institute of Materials Science (KIMS) under the Ministry of Science and ICT.